Introduction to Algorithmic Thinking in python

Algorithmic programming is about solving problems. It involves designing, writing, and testing algorithms to solve specific tasks or challenges. An algorithm is a step-by-step procedure or formula for solving a problem like a food recipe.

Why learning Algorithmic Programming?

Every piece of software, from the simplest calculator app to the most complex machine learning model, is built upon algorithms. Without algorithms, computers wouldn't know how to perform even the most basic tasks 🤖

Two programs might solve the same problem, but one might do it in a fraction of the time or using a fraction of the resources. This efficiency often comes down to the algorithms and data structures chosen by the programmer. In many real-world scenarios, such as financial trading or medical imaging, efficiency can have significant implications.

Problem-Solving Skills

Algorithmic programming hones your problem-solving skills. It teaches you to break down complex problems into manageable parts, think critically, and approach challenges methodically. These skills are valuable in many areas of life, from planning a vacation to managing a business 🔥

Most of tech companies assess algorithmic and problem-solving skills during their hiring processes like you can see on youtube. Being proficient in algorithmic programming can give you an net advantage in job interviews and career advancement.

By the way many groundbreaking advancements in technology, from search engines to genome sequencing, have been made possible by innovative algorithms. Mastery in algorithmic programming can position you to contribute to such advancements.

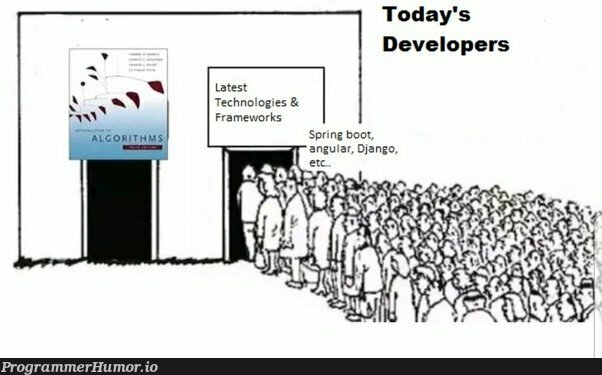

Here a little meme if you are not convinced yet 😎

Introduction

Imagine you have a big box of LEGO pieces, and you want to build a castle. If you just start sticking pieces together without a plan, it might not look like a castle at all. But if you have step-by-step instructions (a plan) to follow, you can build a beautiful castle.

Algorithms are like those step-by-step instructions for computers. They tell computers how to do things, like how to find the biggest number in a list or how to sort a bunch of names in alphabetical order and many more complex things.

Today, we use computers for almost everything - from playing games to helping doctors in hospitals. For computers to be helpful, they need good algorithms. If the algorithms are slow or wrong, computers might take a long time to do something or even make mistakes. That's why it's important to learn how to write good algorithms 🤓

When looking at an algorithm, you need to ask 2 things 🤔

- Does it address the issue at hand?

- Is it resourceful in its approach?

If your programming doesn't fix the issue or isn't resource-savy (like taking too much time or hogging memory), then it's not truly beneficial.

This is the reason we need to learn algorithmic programming best practices, to ensure our programming is rooted in effective and efficient strategies. We want confidence that our approach works well in all scenarios, or at least be aware if there are occasional shortcomings.

Even if your goal is merely to use pre-existing functions and not craft algorithms from scratch, it's essential to understand the underlying algorithms and data setups. Since no single data setup is perfect for every task, understanding their pros and cons is crucial.

Different Types of Algorithms

Let's think of algorithms like tools in a toolbox. Just as you wouldn't use a hammer to screw in a nail, different problems require different algorithms. The environment in which an algorithm runs can also influence the choice. For example, an algorithm running on a powerful server might be different from one on a small device with limited resources.

Historical and Personal Preference can also influence the choice of algorithms. Sometimes, developers or industries might prefer certain algorithms because they are more familiar with them or have used them historically. Like specific Use Cases can influence the choice of algorithms. Some algorithms are designed for very specific scenarios or niches. For example, certain algorithms are tailored for graphic processing, while others might be designed for financial calculations.

- Sorting Algorithms: Imagine you have a deck of cards, and they're all mixed up. Sorting algorithms help put those cards in order, like arranging them from the smallest number to the biggest.

- Searching Algorithms: Let's say you're playing hide and seek. Searching algorithms help the seeker find where the hiders are hiding, but in the computer world, it's about finding a piece of information in a big list.

- Pathfinding Algorithms: Imagine you're in a big maze and need to find the exit. Pathfinding algorithms help find the quickest way out of the maze. They're used in things like GPS to give you driving directions.

- Recursive Algorithms: This is like when you open a box, and inside that box, there's another box, and inside that one, there's yet another box, and so on. Recursive algorithms solve big problems by breaking them down into smaller versions of the same problem.

- Divide and Conquer Algorithms: Imagine you have a huge puzzle to solve. Instead of trying to solve the whole thing at once, you divide it into smaller parts, solve each part, and then put them all together. That's what these algorithms do.

- Greedy Algorithms: These are like when you're collecting coins, and you always pick the biggest coin first to collect the most money quickly. Greedy algorithms always make the choice that seems best at the moment.

- Brute Force Algorithms: Imagine you're trying to open a combination lock, but you don't know the combination. Brute force algorithms try every possible combination until they find the right one.

- Randomized Algorithms: These algorithms use randomness to solve problems. For example, you might use a randomized algorithm to shuffle a deck of cards or to find the shortest path between two points.

- Backtracking Algorithms: Imagine you're trying to find your way through a maze, but you keep getting stuck. Backtracking algorithms help you find a way out by trying different paths until you find one that works.

- Dynamic Programming Algorithms: These algorithms solve big problems by breaking them down into smaller versions of the same problem. They're like recursive algorithms, but they store the results of each subproblem so they don't have to be solved again.

- Hashing Algorithms: These algorithms take a piece of data and turn it into a shorter piece of data. They're used in things like password storage and data compression.

Some key concepts in Algorithmic Programming

Time Complexity

This refers to the amount of time an algorithm takes to complete as a function of the length of the input. It's crucial for understanding the efficiency of algorithms. We often measure time complexity in terms of Big O notation, which describes the worst-case scenario.

Space Complexity

This is about understanding how much memory an algorithm uses. In many scenarios, especially in devices with limited memory, space efficiency is as crucial as time efficiency.

Data Structures

These are ways to organize and store data so that it can be accessed and modified efficiently. Common data structures include arrays, linked lists, trees, and graphs. The choice of data structure often affects the efficiency of an algorithm. For example, some algorithms are faster when using arrays, while others are faster when using linked lists.

You can see on the image below the time and space complexity of some common data structures 🧐

An \(O(log(n))\) example

Let's say we have a problem of size \(n\). Now for each step of our algorithm (which we need to write but do not be affraid 😂), our original problem becomes half of its previous \(size(n/2)\). So, if we keep on dividing our problem into half at each step, we will reach a problem of size 1 in \(log(n)\) steps. So, the time complexity of our algorithm is \(O(log(n))\).

For many people, this is counter-intuitive. How can we find an element in a sorted list of size \(n\) in \(O(log(n))\) time? Let's see how we can do this 🔥

Considering the following problem:

L is a sorted list containing \(n\) signed integers (with \(n\) being big enough), for example L = [-5,-2,-1,0, 1, 2,4] here, \(n\) has a value of 7. If L is known to contain the integer 0, how can you find the index of 0 ?

Naïve approach \(O(n)\)

The first thing that comes to mind is to just read every index until 0 is found. In the worst case, the number of operations is n, so the complexity is \(O(n)\).

This works fine for small values of \(n\), but is there a more efficient way ? Of course we call it Dichotomy

Consider the following algorithm in Python :

a = 0

b = n-1

while True:

h = (a+b)//2 ## // is the integer division, so h is an integer

if L[h] == 0:

return h

elif L[h] > 0:

b = h

elif L[h] < 0:

a = h

a and b are the indexes between which 0 is to be found. Each time we enter the loop, we use an index between a and b and use it to narrow the area to be searched.

In the worst case, we have to wait until a and b are equal. But how many operations does that take? Not \(n\), because each time we enter the loop, we divide the distance between a and b by about two. Rather, the complexity is \(O(log(n))\).

Note: When we write \(log\), we mean the binary logarithm, or log base 2 which we will write \(log_2\)

In the next section we will dive into all the different types of algorithms and how to implement them in python 🐍